Here’s a sentence that will get your attention:

“Mitigating the risk of extinction from A.I. (Artificial Intelligence) should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.”

Say what?

Extinction?

Of humans?

Not ticks or, say, black mambas?

The happy little sentence was released by the Center for AI Safety and published in the New York Times.

The real kicker is that the statement, signed by the CEOs of the leading A.I. companies (OpenAI, Google DeepMind, Anthropic) and by leading scientists, including two of the so-called “godfathers” of A.I., Geoffrey Hinton and Yoshua Bengio, was just that: a statement, hanging out there like some sort of existential sword of Damocles. The rub is that these are smart people, so a guy’s got to wonder.

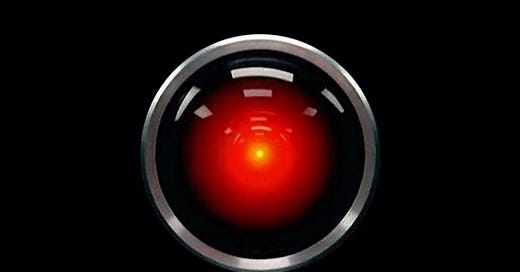

No explanation was given as to exactly how our demise would come, which really doesn’t seem fair. Tell me that we will be consumed by firestorms, or our bodies will be dissolved by a hemorrhagic virus sweeping the Earth and I might be able to “process it,” as we are so often told to do (particularly before the species is wiped out). But I am struggling with the concept of extinction, and especially at the hands of a software program. Like many, I saw the movie “2001: A Space Odyssey” and remember how menacing HAL was. Still, it’s not a trivial leap to make from menacing to game over.

My first computer programming class was at Williams College. And to illustrate how far we’ve come (apparently toward extinction), and apparently how old I am, we wrote our software programs by punching paper computer cards, cleverly called punch cards, with hundreds of holes that told the computer what to do. How the machine could “read” holes in a card and convert them to binary electrical signals, I have no idea. But I vividly remember bringing my stack of punch cards to the computer room and watching them magically feed into the card reader.

One of our homework tasks was to program the computer to score a game of bowling. Sounds easy, but it’s surprisingly not that easy. Bowling has these pesky conditional rules for scoring (your score in a frame is sometimes determined by what you roll in the next frame or two), and these conditional rules muddy the issue.
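For the curious, here is a minimal Python sketch of the scoring logic that made that homework tricky. It assumes a complete, valid ten-pin game given as a flat list of the pins knocked down on each roll; the function name `score_game` is made up for illustration, and input validation is left out.

```python
def score_game(rolls: list[int]) -> int:
    """Score a complete ten-pin game from a flat list of pins knocked down per roll."""
    total = 0
    i = 0  # index of the first roll of the current frame
    for _ in range(10):
        if rolls[i] == 10:
            # Strike: 10 plus the next two rolls, wherever they fall.
            total += 10 + rolls[i + 1] + rolls[i + 2]
            i += 1
        elif rolls[i] + rolls[i + 1] == 10:
            # Spare: 10 plus the next single roll.
            total += 10 + rolls[i + 2]
            i += 2
        else:
            # Open frame: just the pins knocked down in this frame.
            total += rolls[i] + rolls[i + 1]
            i += 2
    return total
```

A perfect game of twelve straight strikes, `score_game([10] * 12)`, comes out to 300. The look-ahead to later rolls is exactly the conditional part; the bonus rolls after a tenth-frame strike or spare count only as bonuses, never as frames of their own.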

I took a second programming class at a different college, which was actually a Boolean algebra class. Boolean algebra figures heavily in programming. It is a type of mathematics in which the answer to a given question is either true or false. And instead of familiar math operators like +, -, x and ÷, Boolean algebra uses AND, OR, and NOT as its operators. So, it’s basically a logical nightmare of solving word problems with these weird operators.
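To make those three operators a little less weird, here is a tiny Python sketch that lists the truth table for AND, OR and NOT over every combination of two true/false inputs. Python happens to spell the operators `and`, `or` and `not`; the function name `truth_table` is just an illustrative choice.

```python
from itertools import product


def truth_table() -> list[tuple[bool, bool, bool, bool, bool]]:
    """Return (A, B, A AND B, A OR B, NOT A) for every combination of A and B."""
    return [(a, b, a and b, a or b, not a) for a, b in product([False, True], repeat=2)]


if __name__ == "__main__":
    print(f"{'A':<6} {'B':<6} {'A AND B':<8} {'A OR B':<7} NOT A")
    for a, b, a_and_b, a_or_b, not_a in truth_table():
        print(f"{a!s:<6} {b!s:<6} {a_and_b!s:<8} {a_or_b!s:<7} {not_a}")
```

Each row is one "word problem" with a true-or-false answer: AND is true only when both inputs are true, OR is true when at least one is, and NOT simply flips its input.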

I don’t remember a thing about that class, probably because Kris Kristofferson’s daughter, Tracy, was in the class and I had a mad crush on her. I was convinced we would marry and people the earth with beautiful children. Well, that didn’t happen. She was, of course, dating a guy named Eric Heiden at the time, who happened to have won five gold medals in speed skating in the 1980 Olympics. Needless to say, she didn’t know I even existed. In the end, I never got the girl, nor did I learn anything about Boolean logic. And that’s where my experience with programming ended.

So, how to understand the coming end of the world? First of all, A.I. is a big, multi-discipline subject. When people say A.I., they could be talking about any one of six exceedingly complex sub-topics: machine learning, neural networks, deep learning, natural language processing, cognitive computing, or computer vision. However, put simply, A.I. is an effort to create machines that learn and solve complex problems in ways similar to the way humans do.

How do we learn, and, by extension, how does a machine learn? There are a number of theories; one that makes sense to me has to do with minimizing loss, or as the cliché goes: “We learn from our mistakes.”

Say you are trying to improve your tennis forehand so that you can consistently land it within 6 inches of the baseline. There are all kinds of variables, or parameters, that you might monkey with in developing that skill: foot position, the angle of the racket at contact, the elevation of the racket in relation to the ball, forward position of the racket at contact, the speed and direction of your arm, and so forth.

One week you work on your foot position and see how that improves your forehand, or, to put it in terms that can be evaluated quantitatively, how much it reduces the distance between where the ball lands and your goal (within 6 inches of the baseline). The next week you might change how you hold the racket (and therefore the angle at which the racket hits the ball) and again see which grip lands the ball with the least error (closest to the 6-inch marker). You could follow this process with a new parameter each week, and eventually your forehand would improve, ending up with the smallest error you can get from those parameters.

One could do the same thing in an A.I. program modeling how to hit a forehand. A set of parameters (racket angle, foot position, etc.) could be put into the algorithm, and the model would predict where the forehand would land for those parameters; that prediction is the model’s output. The difference between the prediction and the desired outcome is the “loss.” The algorithm then tweaks a parameter, runs again, and measures the loss. Iteration after iteration, the loss gets driven down toward a minimum. When people talk about machines “learning,” they are talking about this process of adjusting and re-adjusting parameters to achieve smaller and smaller losses, which is another way of saying moving toward the desired outcome, or answer.
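The tweak-and-measure loop described above can be sketched in a few lines of Python. This is a toy under loudly stated assumptions: the hypothetical `predict_landing` model treats the ball as an ideal projectile (no spin, air drag or contact height), uses only two parameters (launch angle and ball speed), and aims for a spot 6 inches inside the far baseline of a 78-foot court. The hypothetical `tune` function nudges one parameter at a time, keeps any change that lowers the loss, and halves its step size when nothing helps.

```python
import math

TARGET_FT = 77.5  # assumed goal: 6 inches inside the far baseline of a 78 ft court
G_FT_S2 = 32.2    # gravitational acceleration in ft/s^2


def predict_landing(params: dict[str, float]) -> float:
    """Toy model: ideal projectile range in feet, ignoring spin, drag and contact height."""
    angle = math.radians(params["angle_deg"])
    return params["speed_ft_s"] ** 2 * math.sin(2 * angle) / G_FT_S2


def loss(params: dict[str, float]) -> float:
    """How far (in feet) the predicted landing spot is from the goal."""
    return abs(predict_landing(params) - TARGET_FT)


def tune(params: dict[str, float], step: float = 1.0,
         min_step: float = 1e-4, max_rounds: int = 10_000) -> dict[str, float]:
    """Tweak one parameter at a time, keeping any change that lowers the loss."""
    params = dict(params)  # work on a copy so the caller's dict is untouched
    best = loss(params)
    for _ in range(max_rounds):
        improved = False
        for name in list(params):
            for delta in (step, -step):
                trial = {**params, name: params[name] + delta}
                trial_loss = loss(trial)
                if trial_loss < best:
                    params, best, improved = trial, trial_loss, True
        if not improved:
            step /= 2  # no tweak helped this round, so try smaller adjustments
            if step < min_step:
                break
    return params
```

Starting from, say, `tune({"angle_deg": 10.0, "speed_ft_s": 60.0})`, the loss shrinks toward zero as the parameters are adjusted round after round. Real machine-learning systems usually don't guess and check like this; they typically use gradient descent, which calculates which direction to move every parameter at once, but the goal of making the loss smaller is the same.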

In some ways, what A.I. programs do is analogous to evolution. Evolution is essentially the process of random genetic alterations playing out in living creatures. When a genetic change confers some survival advantage (less “loss”), it tends to be carried forward to the next generation. This is akin to the A.I. program altering parameters and going through another iteration. The big difference between the two is that evolution plays out over generations (millennia and longer), while an algorithm can complete an iteration in a fraction of a second.
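The mutate-and-select idea can also be sketched directly, as a classic toy demonstration of cumulative selection. In this hypothetical Python example the "genome" is a string, random copying errors play the role of genetic alterations, and the "survival advantage" is simply matching a made-up target phrase. That target is an assumption of the toy: real evolution has no goal in mind, so the target only stands in for the loss being minimized.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "
TARGET = "EXTINCTION IS NOT OUR DESTINY"  # hypothetical target standing in for "fittest"


def loss(genome: str) -> int:
    """Number of characters that differ from the target (lower is fitter)."""
    return sum(g != t for g, t in zip(genome, TARGET))


def mutate(genome: str, rng: random.Random, rate: float = 0.05) -> str:
    """Copy the genome, randomly replacing each character with probability `rate`."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else c for c in genome)


def evolve(seed: int = 0, offspring: int = 100,
           max_generations: int = 10_000) -> tuple[str, int]:
    """Return the final genome and the number of generations it took."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)  # random starting genome
    for generation in range(max_generations):
        if loss(parent) == 0:
            return parent, generation
        # Each generation: many randomly mutated copies; only the fittest survives.
        children = [mutate(parent, rng) for _ in range(offspring)]
        best = min(children, key=loss)
        if loss(best) <= loss(parent):
            parent = best
    return parent, max_generations
```

No single mutation "knows" the answer, yet keeping the small improvements generation after generation gets there. Methods built this way, usually called evolutionary algorithms, are a real family of optimization techniques.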

To appreciate the raw power of A.I., consider Siri (loosely speaking, a chatbot) or Google Translate. Siri can understand human language because it has been trained on massive amounts of language data. It can also respond to us, or is “predictive,” for the same reason. What’s more, given enough data, it’s plausible to think that a chatbot could predict behavior: our behavior.
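As a very rough illustration of what "predictive" means here, below is a toy Python next-word predictor. It only counts which word follows which in a tiny, made-up training text and predicts the most frequent follower. Siri and Google Translate rely on vastly larger neural-network models, so treat this strictly as a cartoon of the idea; `train`, `predict_next` and `CORPUS` are all hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Hypothetical, tiny "training data"; real systems learn from billions of words.
CORPUS = "siri what is the weather today . siri what time is it . siri what is the news"


def train(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> str | None:
    """Return the word most often seen after `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    return counts.most_common(1)[0][0] if counts else None
```

With the sample `CORPUS`, `predict_next(train(CORPUS), "what")` gives `"is"`, because "is" follows "what" twice and "time" only once. The prediction is nothing more than a pattern pulled from the data it was trained on.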

What might be even more frightening than extinction of the species are reports of people having relationships with sophisticated chatbots called A.I. Replikas. Wondering what that is? Believe it or not, there is a company named Replika, and this is how they answer the question:

> Replika is your personal chatbot companion powered by artificial intelligence!
>
> Replika is the AI for anyone who wants a friend with no judgment, drama, or social anxiety involved. You can form an actual emotional connection, share a laugh, or chat about anything you would like! Each Replika is unique, just like each person who downloads it. Reacting to your AI's messages will help them learn the best way to hold a conversation with you & what about!
>
> With your Replika, you can:
>
> - Speak freely without judgment, whenever you would like.
> - Grow together.
> - Choose your relationship status to your AI-- Who do you want your Replika to be for you? Virtual girlfriend or boyfriend, friend, mentor? Or would you prefer to see how things develop organically?
> - Explore your personality.
> - Feel better with Replika.

If you are still not alarmed by this, take a look at the documentary, “My A.I. Lover: Three women reflect on the complexities of their relationships with their A.I. companions,” by Chouwa Liang. No pun intended, but it speaks for itself.

There are clearly benefits to the A.I. field. Who can say a Google search isn’t efficient and helpful? All kinds of data-intensive tasks could be expedited. Algorithms might be able to spot budding cancer cells on a mammogram that a radiologist would miss. Once we get there, self-driving cars will likely be safer and more efficient than freeways full of somewhat randomly skilled drivers, some of whom are undoubtedly drunk. In general, A.I. offers the automation of many tasks, which usually results in increased productivity.

And what are the risks?

With automation comes, of course, job displacement. How much and whether that’s a good thing in the end is hard to know.

Bias is a significant risk. Given that humans are “training” A.I. programs, their biases could easily end up in the algorithms, which could then magnify them.

Misinformation has already been a problem. With the verisimilitude A.I. can achieve now and in the future, it is entirely plausible that bad actors will deploy the technology for bad ends. Knowing what’s true and what’s not becomes a frightening conundrum. Misinformation could be used in the economic arena, as well as in political and military settings. It’s easy to imagine the chaos that could be created by someone committed to that.

And, ultimately, the interconnectedness of systems (economies, energy, communications, healthcare) may turn out to be our Achilles’ heel. No one really understands the upper limit, if there is one, of A.I. In some instances, even the coders will admit A.I. programs can be black boxes: how results are achieved can get murky when operations are happening a trillion times every second and algorithms are changing on their own. Our interconnectedness could make a rogue program virtually uncontainable.

I guess that’s where the E word comes in. I’m not convinced extinction is our destiny. Still, after learning more about A.I., I’m not saying it’s not in our destiny.

In the meantime, I’m going to look into how we can get ourselves listed on the Endangered Species List. After all, it worked for owls and wolves.

Maybe Siri can help.